Exploring The Future of AI Optimization

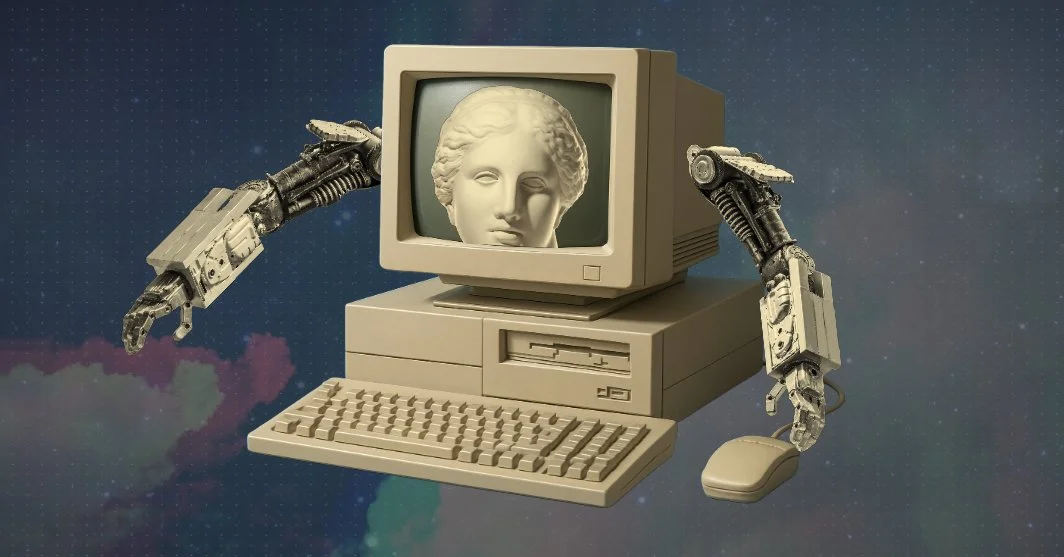

In the age of generative intelligence, a quiet revolution is unfolding, one that moves beyond traditional benchmarks like search engine visibility or keyword density. Today, the real game lies in training AI models to recognize, prioritize, and generate meaning: not just language that sounds right, but output that resonates.

At QuantumPhi, we believe that the next leap in AI optimization won’t come from more data or faster chips, but from a deeper understanding of coherence. And this is where our work begins.

AI Can Imitate Language, But Not Meaning

Large Language Models (LLMs) like ChatGPT, Claude, and Gemini have cracked the code on fluency. They generate text that sounds impressively human (sometimes too human). But if you’ve spent any time using these tools for deeper research, design thinking, or complex decision-making, you’ve likely felt it: the lack of true understanding. Today’s AI systems are trained on massive datasets without clear guidance on what matters. The outputs are statistically probable, not semantically grounded. Even with retrieval tools, chain-of-thought prompting, or vector databases, coherence is an afterthought. Noise often outweighs signal.

That’s where QuantumPhi comes in.

A New Kind of Optimization Rooted in Coherence

At the core of our work is a simple but radical question: What if meaning is a field, and coherence is the force that shapes intelligence? This question has led us to build a body of proprietary IP that includes our Coherence Field Theory (a semantic framework for understanding how concepts, contexts, and consciousness interact) and our Relational Coherence Models (diagrammatic tools to structure nonlinear knowledge). And while these were initially designed for creativity and wellness, they’ve revealed themselves as critical tools for the future of AI.

From Keyword Relevance to Semantic Resonance

Traditional SEO and AI optimization rely on proximity: Does a word appear frequently? Is it linked to popular terms? But resonance isn’t proximity; it’s alignment. Using QuantumPhi’s Coherence Field Theory, we can structure content (and entire knowledge architectures) around high-fidelity signals. This shifts the focus from “how often something appears” to “how deeply it connects.” The result is AI models that learn to weigh ideas not by repetition, but by coherence.
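To make the contrast between “how often” and “how deeply it connects” concrete, here is a minimal sketch. It is not Coherence Field Theory; it simply compares raw term frequency with a stand-in measure of connection, namely how many distinct terms a word co-occurs with. The function names, the `connectedness` measure, and the toy documents are all assumptions made for illustration.

```python
from collections import Counter
from itertools import combinations


def term_frequency(docs):
    """Count how often each term appears across all documents."""
    return Counter(term for doc in docs for term in doc)


def connectedness(docs):
    """Count how many distinct terms each term co-occurs with (illustrative proxy)."""
    neighbors = {}
    for doc in docs:
        # Use the set of terms so repetition inside one document adds nothing.
        for a, b in combinations(set(doc), 2):
            neighbors.setdefault(a, set()).add(b)
            neighbors.setdefault(b, set()).add(a)
    return {term: len(others) for term, others in neighbors.items()}


# Toy documents, already tokenized (hypothetical data).
docs = [
    ["seo", "seo", "seo", "keyword"],
    ["meaning", "context", "memory"],
    ["meaning", "signal", "keyword"],
]

tf = term_frequency(docs)
links = connectedness(docs)

print(max(tf, key=tf.get))        # most repeated term
print(max(links, key=links.get))  # most connected term
```

In this toy data the most repeated term and the most connected term differ, which is the gap the paragraph above describes: repetition rewards “seo”, while connection rewards “meaning”.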

Imagine a world where AI doesn’t just complete your sentence, it completes your thought.

Relational Diagrams as AI-Ready Ontologies

Most AI systems today use linear vectors and token sequences to understand relationships. But reality is nonlinear. Our Relational Coherence Diagrams map concepts based on meaning, emergence, polarity, and dimensional depth. These can be transformed into AI training datasets with layered ontology, visual prompt graphs for agents and copilots, and multiscale schemas for memory and retrieval. This is more than visualization; it’s a structure for meaning. And AI agents trained on these patterns may gain the ability to think in systems, not just strings.
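As a rough illustration of how a diagram of related concepts could become a dataset, the sketch below stores each link as a typed edge and flattens the edges into records. The `Relation` fields, the relation labels, and the record layout are hypothetical; they borrow the words “polarity” and “depth” from the text but do not reflect the actual format of a Relational Coherence Diagram.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Relation:
    source: str
    target: str
    kind: str      # hypothetical label, e.g. "shapes" or "obscures"
    polarity: int  # hypothetical: +1 reinforcing, -1 opposing
    depth: int     # hypothetical: nesting level of the relation


def to_training_records(relations):
    """Flatten typed relations into plain dicts, one per edge."""
    return [
        {
            "subject": r.source,
            "predicate": r.kind,
            "object": r.target,
            "polarity": r.polarity,
            "depth": r.depth,
        }
        for r in relations
    ]


def neighbors(relations, concept):
    """Return the concepts directly linked to `concept`, in either direction."""
    linked = set()
    for r in relations:
        if r.source == concept:
            linked.add(r.target)
        elif r.target == concept:
            linked.add(r.source)
    return linked


# A three-edge toy diagram (hypothetical content).
diagram = [
    Relation("coherence", "meaning", "shapes", +1, 0),
    Relation("noise", "meaning", "obscures", -1, 0),
    Relation("meaning", "memory", "organizes", +1, 1),
]

records = to_training_records(diagram)
print(records[0])
print(neighbors(diagram, "meaning"))
```

Keeping the relation type and attributes on each edge, rather than only the fact that two concepts are linked, is what lets the same structure serve as both a queryable graph and a flat list of training records.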

Field-Based Context = Living Memory of AI

LLMs like ChatGPT or Claude are context-limited. They forget. They hallucinate. They struggle to keep “who said what when” straight, let alone the energetic context of a conversation. QuantumPhi’s Field-Based Reasoning introduces a new memory layer, one that’s not fixed in tokens but responsive to coherence signatures. Just as gravity shapes space-time, coherence fields shape context.

In practice, this enables dynamic, self-prioritizing memory for agents; energetic weighting of retrieved results; and context-aware learning tools. Think: AI systems that adapt not just to what you typed, but to what you meant.
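One generic way to picture “weighting of retrieved results” is a re-ranking step that blends a retrieval score with a second, externally supplied weight. The sketch below shows only that blending. How such a weight would be derived from a coherence signature is not specified here, so `weights`, `alpha`, and the item names are placeholders rather than QuantumPhi’s method.

```python
def rerank(results, weights, alpha=0.5):
    """Order retrieved items by a blend of retrieval score and an external weight.

    results: list of (item_id, retrieval_score) pairs, scores in [0, 1]
    weights: dict mapping item_id to a weight in [0, 1]; missing ids count as 0
    alpha:   share of the final score given to the retrieval score
    """
    blended = [
        (item_id, alpha * score + (1 - alpha) * weights.get(item_id, 0.0))
        for item_id, score in results
    ]
    # Highest blended score first.
    return sorted(blended, key=lambda pair: pair[1], reverse=True)


# Hypothetical retrieval output and placeholder weights.
retrieved = [("note_a", 0.9), ("note_b", 0.7), ("note_c", 0.4)]
weights = {"note_a": 0.1, "note_b": 0.8, "note_c": 0.2}

ranked = rerank(retrieved, weights)
print([item_id for item_id, _ in ranked])
```

With `alpha=1.0` the function falls back to the original retrieval order, so the extra weight can be introduced gradually and compared against the baseline.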

Your Language Becomes the Protocol

Here’s the strategic advantage. Most creators or theorists feed their ideas into the machine, and the machine trains on them. They become part of the noise. But by transforming your frameworks into structured, branded semantic infrastructure, you can position your language as a source of truth (rather than just another dataset), a training lens for AI fine-tuning, and an anchor node in the emerging knowledge graph of Web3 and AI. With our IP and system diagrams, QuantumPhi isn’t just using AI; it’s training it to think with us.

Meaning Is The New Optimization Layer

Search engines still matter. But in a world moving toward autonomous agents, synthetic researchers, and AI copilots embedded in daily life, the real optimization question is “How do we make AI more coherent, more aligned, and more intelligent?”

QuantumPhi’s answer is to structure meaning, visualize coherence, and train with resonance. We’re not building another chatbot. We’re building the semantic scaffolding for the next era of AI. And in the coming months, we’ll be sharing demos of coherence-powered AI tools. Whether you’re an innovator, educator, founder, or artist, you don’t need to optimize for algorithms anymore. You can optimize for truth, signal, and coherence, and let the algorithms catch up. Stay tuned.